Some shortcuts and hacks in life and business are worth it, and some simply aren’t. Some seem harmless, appealing, inexpensive, and time-saving at first, yet end up causing more problems and hassle than the original situation ever did. As one previously aspiring attorney is coming to find out, AI in the legal realm falls into the category of shortcuts that aren’t worth it, at least for now. So if you are an attorney or a marketing professional who works with attorneys, you’ll want to note this case and learn from another’s mistakes instead of venturing down that path or similar ones yourself.

“The World’s First Robot Lawyer”

San Francisco’s DoNotPay is “the world’s first robot lawyer,” according to founder, CEO, and software engineer Joshua Browder. Browder, a Stanford University undergraduate and 2018 Thiel Fellow who has received a remarkable amount of media attention in his short career, founded the tech company in 2016. He says he started the company after moving to the U.S. from the U.K. and receiving many parking tickets that he couldn’t afford to pay. Instead of paying them, he looked for legal loopholes he could use to get out of them.

He argues that government agencies and large corporations have conflicting rules and regulations that serve only to rip off consumers. With DoNotPay, his goal is to give consumers a voice without requiring them to pay steep legal fees. According to the company’s website, it uses artificial intelligence (AI) to handle approximately 1,000 cases daily. Parking ticket cases have a success rate of about 65 percent, while Browder claims many other case types are 100 percent successful.

DoNotPay claims to have the ability to:

- Fight corporations

- Beat bureaucracy

- Find hidden money

- Sue anyone

- Automatically cancel free trials

The company’s website features a laundry list of legal problems and matters its AI can handle, such as:

- Jury duty exemptions

- Child support payments

- Clean credit reports

- Defamation demand letters

- HOA fines and complaints

- Warranty claims

- Lien removals

- Neighbor complaints

- Notice of intent to homeschool

- Insurance claims

- Identity theft

- Filing a restraining order

- SEC complaint filings

- Egg donor rights

- Landlord protection

- Stop debt collectors

DoNotPay: Plagued with Problems

While his intentions might seem reasonable or even noble to some, they are landing Browder in legal hot water of his own, and there may currently be no robot lawyer to represent him.

State Bars Frown on AI in the Courtroom

In February 2023, a California traffic court was set to see its first “robot lawyer,” as Browder planned to have an AI-powered robot argue a defendant’s traffic ticket case in court. Had his plan come to fruition, the defendant would have worn smart glasses to record the court proceedings and a small speaker near their ear, through which the AI would dictate appropriate legal responses.

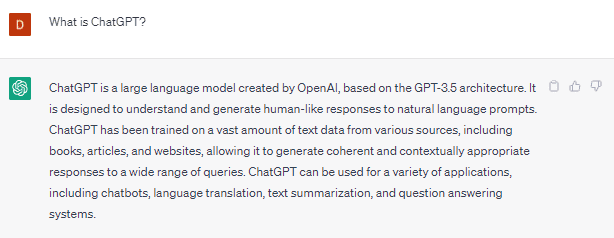

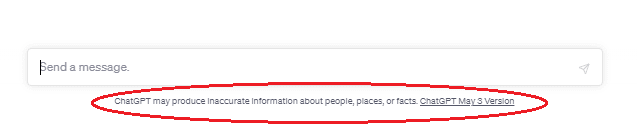

This system relied on AI text generators, including ChatGPT and DaVinci. In the courtroom, the AI would process what was being said and generate real-time responses for the defendant to repeat. Essentially, defendants could act as their own lawyer with the help of DoNotPay’s robot lawyer, a technology that had never before been used in a courtroom.

Many state bars and related entities quickly expressed their strong disapproval when they learned of Browder’s plans. Multiple state bars threatened the business, some even warning of prosecution and prison time. For example, one state bar official reminded him that the unauthorized practice of law is a misdemeanor in certain states, punishable by up to six months in county jail.

State bars license and regulate lawyers in their respective states, ensuring that people who need legal assistance hire lawyers who understand the law and know what they are doing. In their view, Browder’s AI technology, as intended for courtroom use, clearly constitutes the “unauthorized practice of law.”

DoNotPay is now under investigation by several state bars, including the State Bar of California. AI in the courtroom is also problematic because federal courts and many state courts currently prohibit recording court proceedings. Even so, Browder’s company offered $1 million to any lawyer willing to let its chatbot argue a case before the U.S. Supreme Court. To date, no one has accepted the offer.

DoNotPay Accused of Fraud

As if being reprimanded by several state bars weren’t bad enough, Browder and DoNotPay now face mounting litigation, including at least one potential class action suit. The silver lining is that perhaps Browder will finally get to test his robot lawyer in court.

On February 13, 2023, Seattle paralegal Kathryn Tewson filed a petition with the New York Supreme Court requesting an order requiring DoNotPay and Browder to preserve evidence and seeking pre-action discovery. She plans to file a consumer rights suit alleging that the company is fundamentally a fraud.

What’s even more interesting is that Tewson notes in her filing that she consents to Browder using his robot lawyer to represent himself in this case and even seems to dare him to do so:

For what it is worth, Petitioner does and will consent to any application Respondents make to use their “Robot Lawyer” in these proceedings. And she submits that a failure to make such an application should weigh heavily in the Court’s evaluation of whether DoNotPay actually has such a product.

Based on her own research, Tewson has accused Browder of not using AI at all, but instead piecing together different documents to produce legal paperwork for consumers who believe they are receiving either AI-generated or attorney-generated content. If DoNotPay is in fact using AI, as Browder claims, it does not appear to be producing quality work product, and consumers are starting to notice.

A Potential Class Action Lawsuit

As if these legal issues weren’t already enough, next on the DoNotPay docket is a potential class action lawsuit. On March 6, 2023, Jonathan Faridian of Yolo County filed a lawsuit in San Francisco seeking damages for alleged violations of California’s unfair competition law. Faridian alleges he wouldn’t have subscribed to DoNotPay services if he knew that the company was not actually a real lawyer. He asks the court to certify a class of all people who have purchased a subscription to DoNotPay’s service.

Faridian’s lawyer, Jay Edelson, filed the complaint on his behalf, alleging that Faridian subscribed to DoNotPay and used it for a variety of legal tasks, such as:

- Drafting demand letters

- Drafting an independent contractor agreement

- Small claims court filings

- Drafting two LLC operating agreements

- An Equal Employment Opportunity Commission job discrimination complaint

Faridian says he “believed he was purchasing legal documents and services that would be fit for use from a lawyer that was competent to provide them.” He further claims that the services he received were “substandard and poorly done.”

Edelson has successfully sued Google, Amazon, and Apple for billions. The New York Times has called him the “most feared lawyer in Silicon Valley.”

When asked directly whether DoNotPay would hire a lawyer for its defense or represent itself in court using its own tools, Browder said, “I apologize given the pending nature of the litigation, I can’t comment further.” Even so, he recently tweeted, “We may even use our robot lawyer in the case.”

What Lawyers and Marketing Professionals Can Learn From DoNotPay’s Mistakes

Stanford professors say that Browder is “not a bad person. He just lives in a world where it is normal not to think twice about how new technology companies could create harmful effects.” Whether or not this is true remains to be seen. In the meantime, attorneys and marketing professionals can glean a lot from Browder’s predicaments. For several reasons, they certainly need to think twice about the potentially harmful effects of using AI technology.

DoNotAI

The overarching lesson from the legal predicaments facing Browder and his business is that AI isn’t something law firms or attorneys (or even those aspiring to join the legal profession) should dabble in, at least for now. It isn’t worth using AI tools such as ChatGPT or Google’s new Bard, whether for online form completion like DoNotPay or for marketing content like blogs or newsletters. You don’t want to give the impression that something was drafted or reviewed by a licensed attorney when, in reality, it was essentially written by a robot. Nor do you want to be accused of piecing legal documents together or performing shoddy work as an attorney because you relied on AI.

While relying on AI might seem harmless in some areas, it could later prove problematic, as it has for Browder. For example, using AI for any of your work or marketing content could:

- Tarnish your reputation in your community and with your colleagues and network

- Have your actions called into question by your state bar association

- Provide consumers with incorrect or simply unhelpful information, proving disastrous for your marketing and SEO efforts

- Lower your SEO rankings and decrease your potential client leads

- Expose you to legal action for malpractice or fraud

Adhere to Professional Standards

Always adhere to your professional standards and codes of conduct. If anything related to the use of AI seems questionable or unethical, treat it as such and steer clear of it. Using AI as a substitute for the advice and counsel of a bona fide attorney, whether online, in the courtroom, or in representing your clients, isn’t acceptable under any state bar’s rules at this time. Shortcuts that rely on AI aren’t worth the professional consequences, which can go as far as having your license suspended or revoked.

What This Means for Legal Content

AI is permissible and even valuable for some minor legal content generation tasks, such as determining keywords or composing an outline. However, these new and still emerging technologies shouldn’t be used to draft entire blog posts, white papers, newsletters, eBooks, landing pages, or other online marketing copy. There are several reasons to avoid this:

- AI-generated content may soon carry a watermark that detection tools can identify

- We don’t yet know how Google will treat such content. Although Google currently says the quality of content matters more than how it is produced, AI may not generate quality content, and Google could change its stance at any point

- State bars may view AI-generated marketing content as unethical or fraudulent

- The use of AI-generated content could constitute the unauthorized practice of law in some states

- AI content may provide incorrect information and come across as cold or impersonal, something attorneys definitely want to avoid when marketing to potential clients

Do You Need Help Producing Original Content?

If you are an attorney or marketing professional who needs help producing legal content, Lexicon Legal Content can help. Don’t cut corners and put yourself at risk by turning to AI-generated content. Our team of attorney-led writers can produce valuable content for your website or other marketing efforts that passes not only plagiarism detection but also AI detection. All content is either written or reviewed by a licensed attorney. Talk to a content expert today about how we can meet your legal content needs.